I am a Visiting Fellow at the Tata Institute of Fundamental Research, India, advised by Prof. Hariharan Narayanan. I completed my Ph.D. in Electrical Engineering at the Indian Institute of Technology, Gandhinagar, India, under the mentorship of Prof. Shanmuganathan Raman. My research interests include Computer Graphics, Computer Vision, Deep Learning, Shape Analysis, Manifold Learning, and Algebraic Combinatorics.

Email / CV / Google Scholar / GitHub / LinkedIn

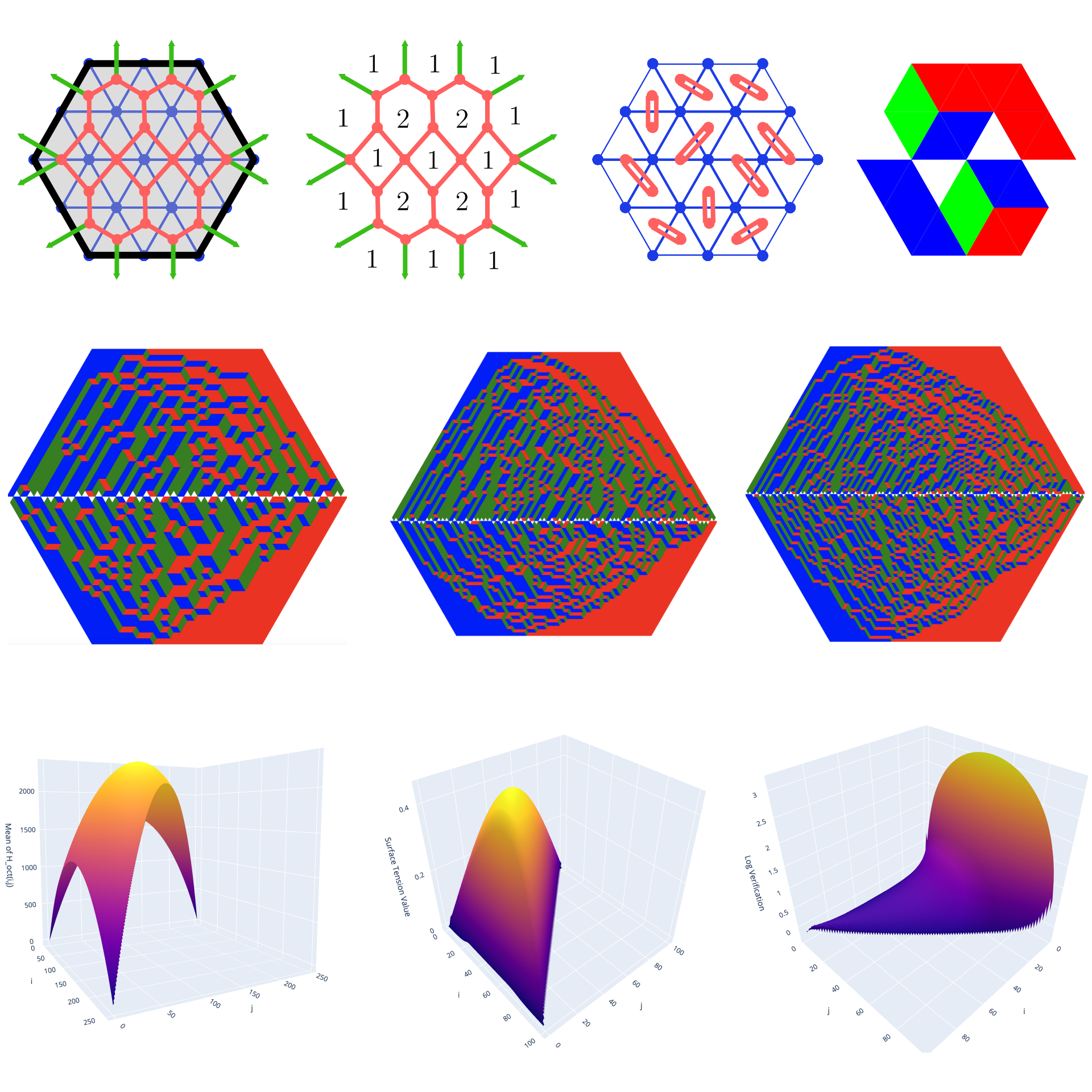

Aalok Gangopadhyay, Hariharan Narayanan.
[paper] [code] [slides] [talk]

We investigate the Horn problem and its randomized version, which involve determining the possible spectra of sums of Hermitian matrices with given spectra, using the hives framework introduced by Knutson and Tao. Building on previous work by Narayanan and Sheffield, we provide upper and lower bounds on the surface tension function associated with large deviations, and derive a closed-form expression for the total entropy of a surface tension minimizing continuum hive with boundary conditions arising from Gaussian Unitary Ensemble eigenspectra. Additionally, we present empirical results for random hives and lozenge tilings obtained via the octahedron recurrence, along with a numerical approximation of the surface tension function.
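
For readers who want to experiment, the randomized Horn problem can be sampled numerically. The sketch below is an illustration written for this page, not the paper's code, and it does not use hives or the octahedron recurrence. It assumes the standard model in which the two Hermitian matrices are conjugated by independent Haar-random unitaries; the helper names `haar_unitary` and `random_horn_sample` are hypothetical.

```python
import numpy as np

def haar_unitary(n, rng):
    """Sample an n x n unitary matrix from the Haar measure (QR with phase fix)."""
    z = (rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    # Rescale each column of q by the phase of the matching diagonal entry of r,
    # which makes q Haar-distributed rather than biased by the QR convention.
    d = np.diag(r)
    return q * (d / np.abs(d))

def random_horn_sample(a, b, rng=None):
    """Eigenvalues (ascending) of A + B, where A and B have spectra a and b
    and are conjugated by independent Haar-random unitaries."""
    rng = np.random.default_rng() if rng is None else rng
    n = len(a)
    u, v = haar_unitary(n, rng), haar_unitary(n, rng)
    A = u @ np.diag(a) @ u.conj().T
    B = v @ np.diag(b) @ v.conj().T
    return np.linalg.eigvalsh(A + B)
```

Every sample respects the trace identity sum(c) = sum(a) + sum(b) and Weyl's inequalities such as max(c) <= max(a) + max(b); the Horn inequalities describe exactly which spectra c can occur.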

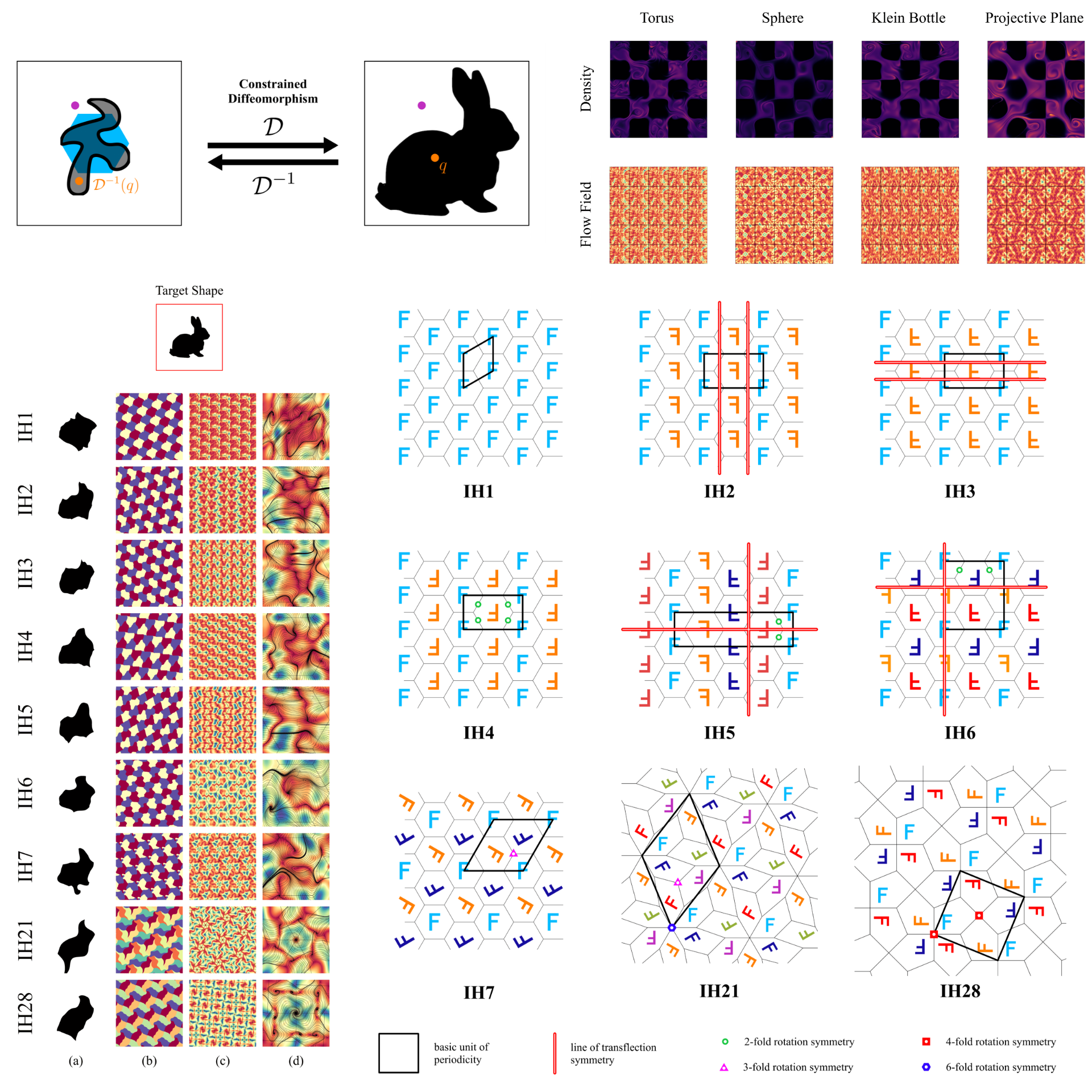

Aalok Gangopadhyay, Dwip Dalal, Progyan Das, Shanmuganathan Raman.

We introduce Flow Symmetrization, a method for representing parametric families of diffeomorphisms that satisfy symmetry constraints such as periodicity, rotation equivariance, and transflection equivariance. The approach is differentiable, which makes it suitable for gradient-based optimization and well suited to representing tile shapes in tiling classes, with applications to problems such as Escherization and density estimation. In our experiments, we deform tile shapes to match target shapes on the Euclidean plane and perform density estimation on non-Euclidean identification spaces such as the torus, sphere, Klein bottle, and projective plane.

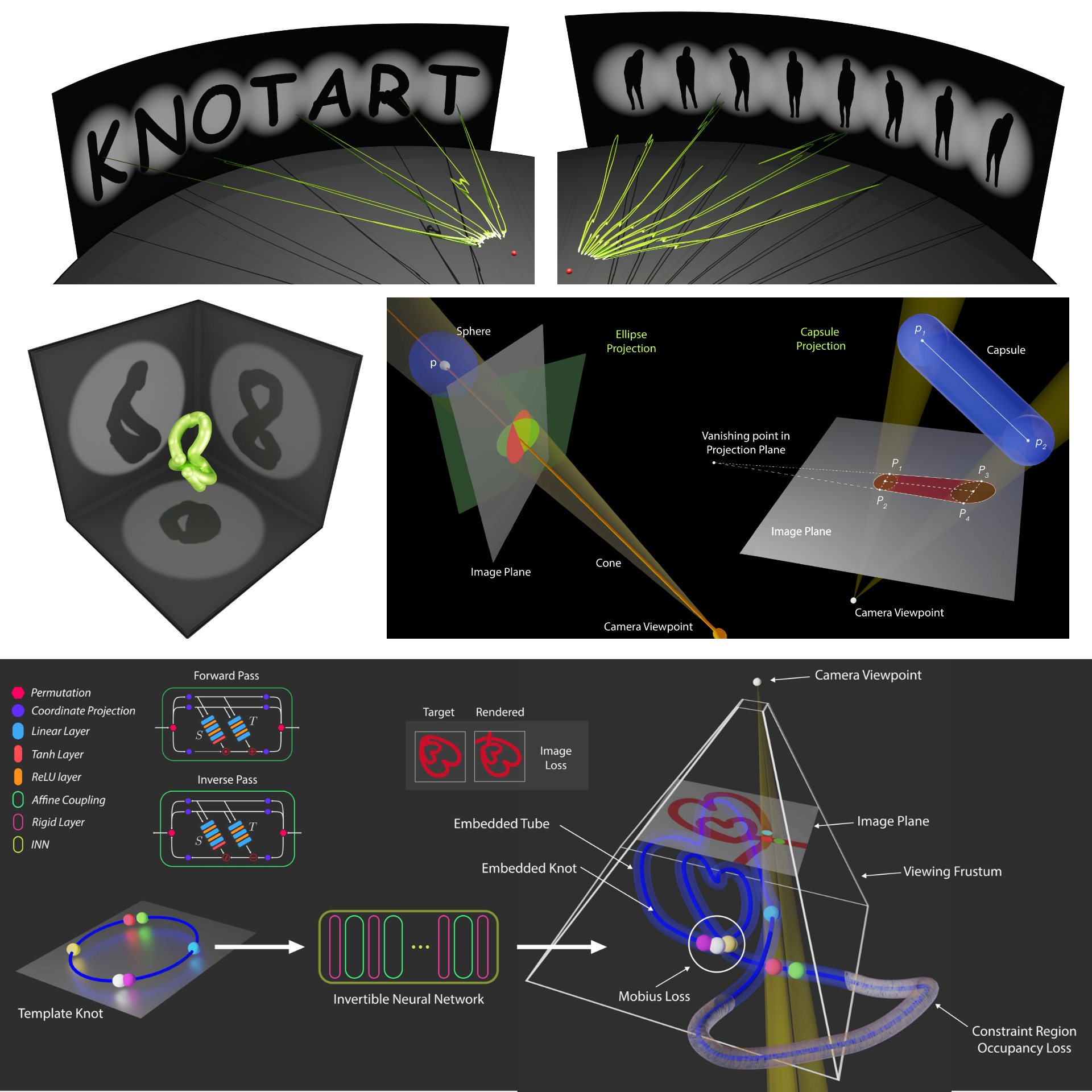

Aalok Gangopadhyay, Paras Gupta, Tarun Sharma, Prajwal Singh, Shanmuganathan Raman.
[paper] [code] [slides] [talk]

We address the problem of creating knot-based inverse perceptual art: finding a 3D tubular structure whose projections match multiple target images from specified viewpoints. Our method combines a differentiable rendering algorithm with gradient-based optimization to search for ideal knot embeddings, which are represented by homeomorphisms parametrized by an invertible neural network. We impose physical constraints through loss functions so that the tube is feasible, free of self-intersections, and cost-effective, and we demonstrate the effectiveness of our approach with both simulations and a real-world 3D-printed example.

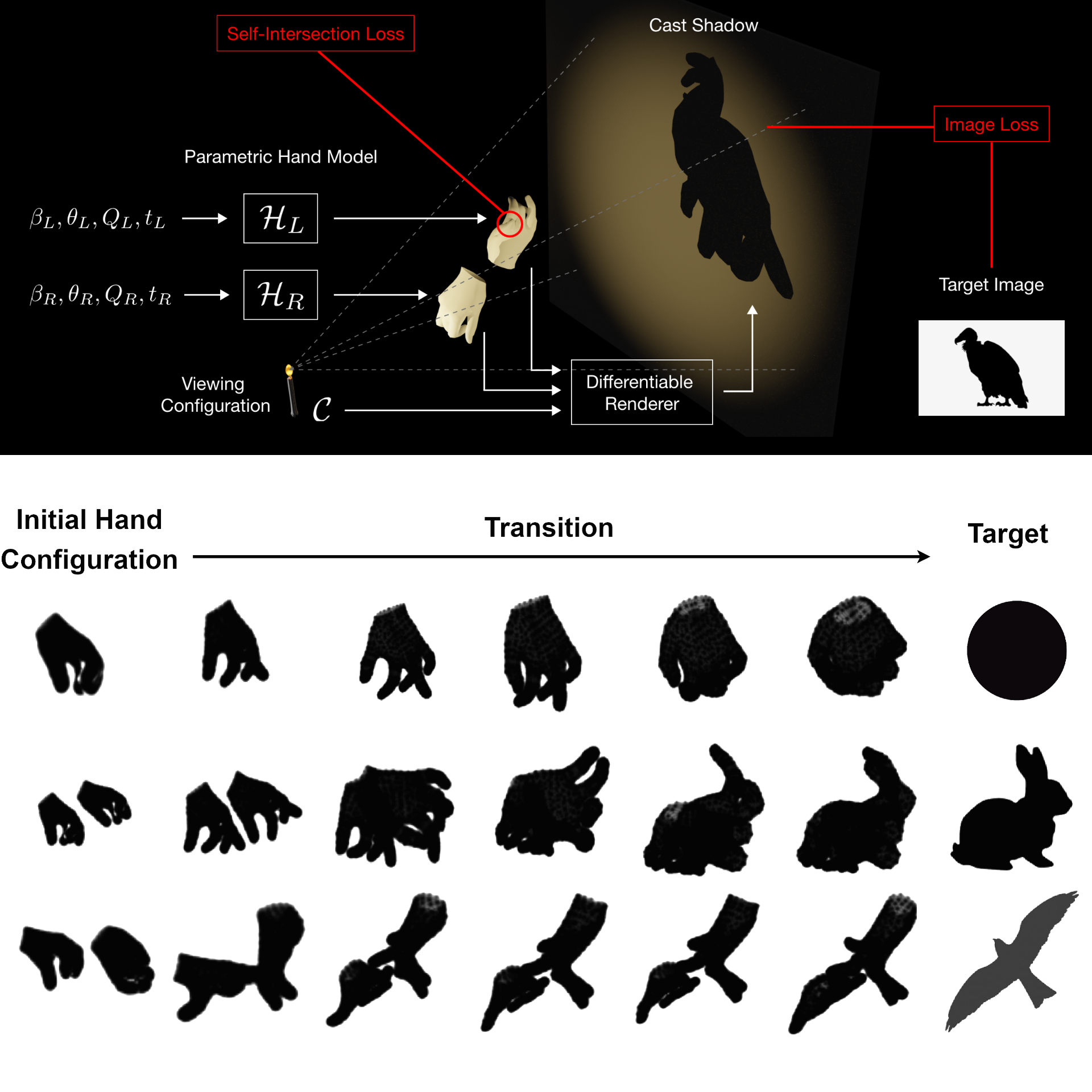

Aalok Gangopadhyay, Prajwal Singh, Ashish Tiwari, Shanmuganathan Raman.

Shadow art creates captivating effects through 2D shadows cast by 3D shapes; hand shadows (or shadow puppetry) use hands and fingers to form meaningful silhouettes on a wall. This work introduces a differentiable rendering-based approach that deforms hand models so that they cast shadows matching a desired target image under a given lighting configuration. We demonstrate results with shadows cast by two hands and show interpolation of hand poses between target shadow images, offering a useful tool for the graphics community.

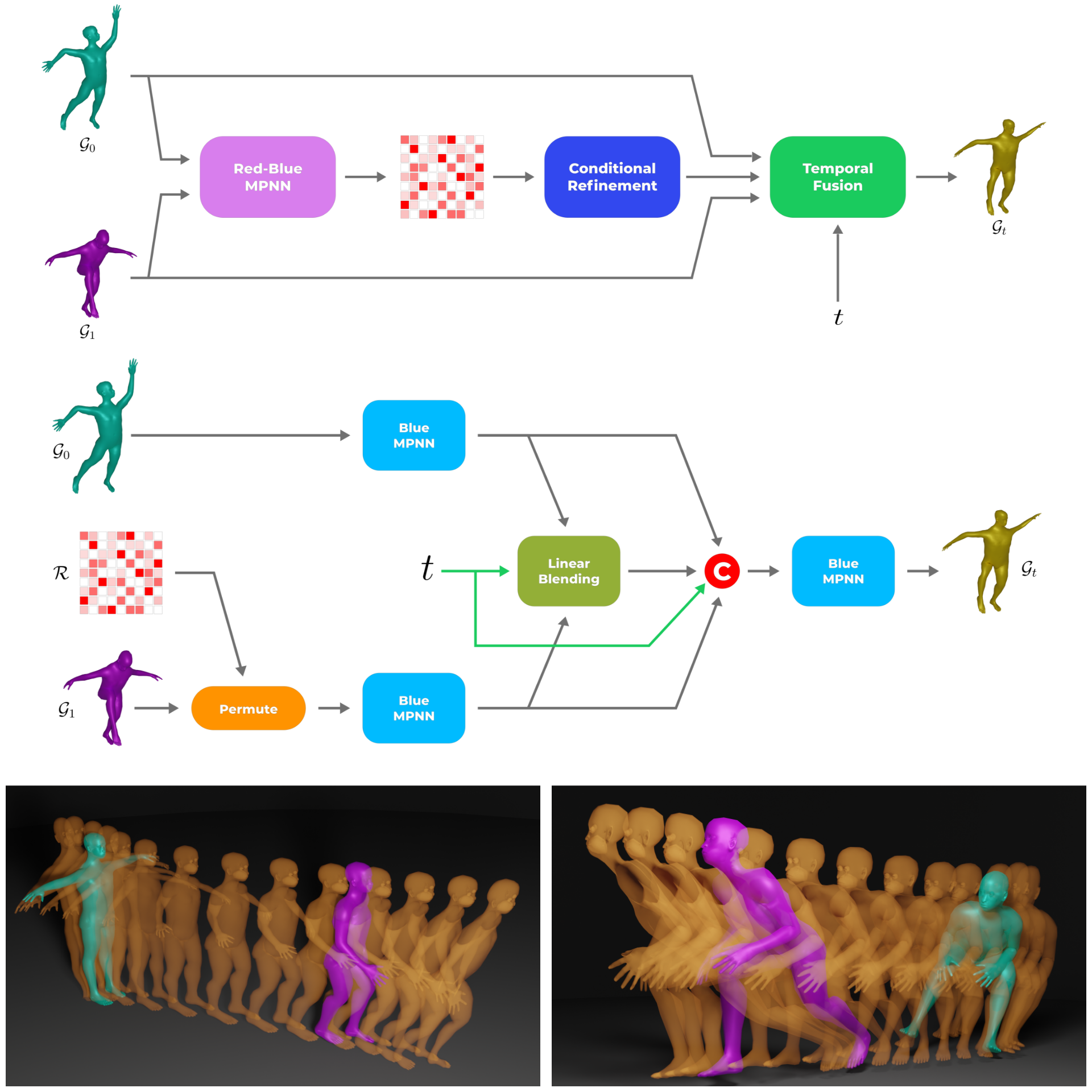

Aalok Gangopadhyay, Abhinav Narayan Harish, Prajwal Singh, Shanmuganathan Raman.

We propose a self-supervised deep learning framework for blending meshes that lack correspondence. It uses Red-Blue MPNN, a novel graph neural network that estimates correspondence via an augmented graph. We also introduce a conditional refinement scheme that finds exact correspondence under certain conditions, and a second graph neural network that processes the aligned meshes together with time values to generate the blended output. Experiments on our large-scale synthetic dataset, created using motion capture and human mesh design software, show that our approach produces realistic body-part deformations from complex inputs.

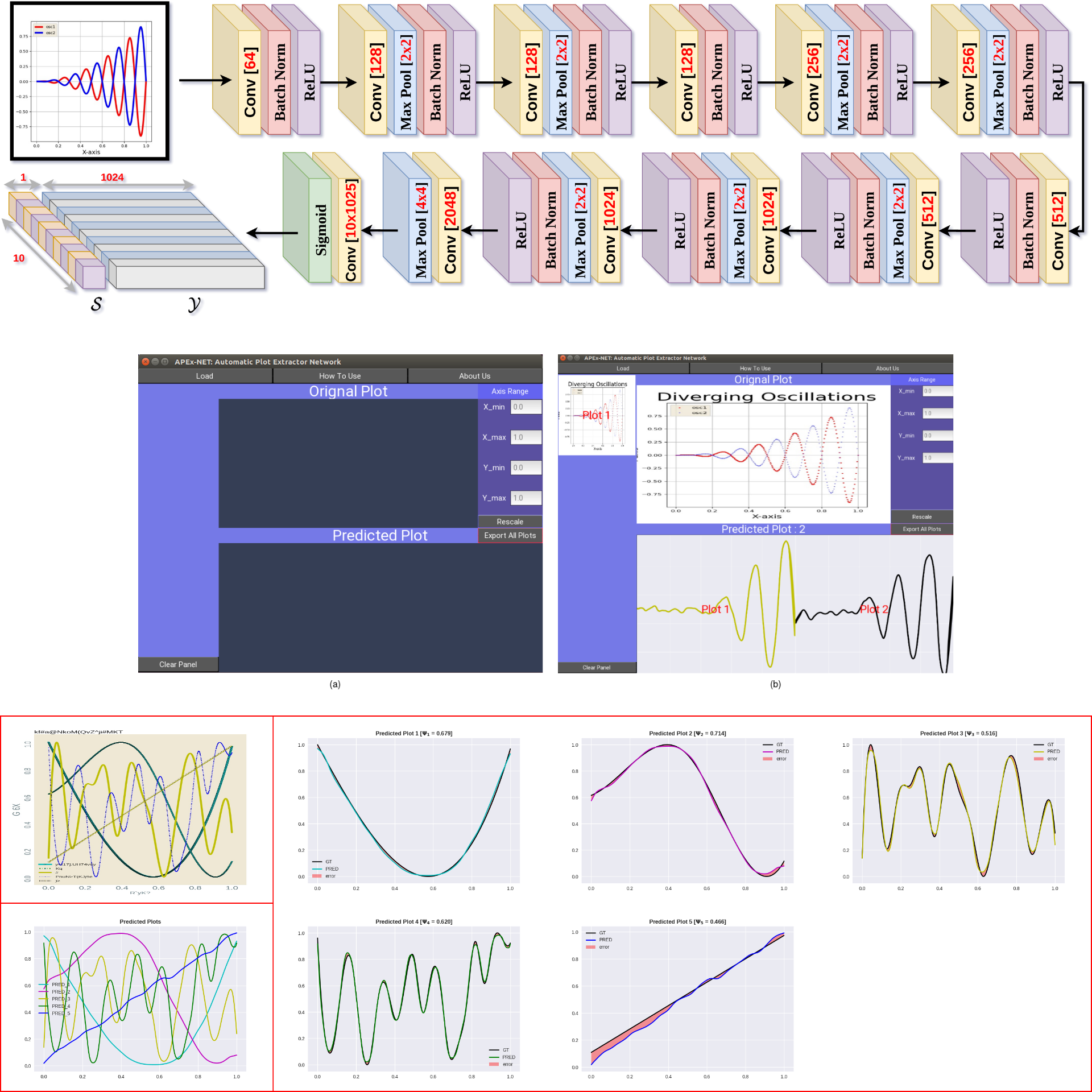

Aalok Gangopadhyay, Prajwal Singh, Shanmuganathan Raman.
[paper] [code] [slides] [talk]

Automatic plot extraction is the task of identifying and extracting individual line plots from images that contain multiple 2D line plots. It has many real-world applications but typically requires significant human intervention. To reduce this need, we propose APEX-Net, a deep learning framework with novel loss functions, and introduce APEX-1M, a large-scale dataset of plot images paired with their raw data. APEX-Net achieves high accuracy on the APEX-1M test set and effectively extracts plot shapes from unseen images, and we provide a GUI for community use.

Aalok Gangopadhyay, Shashikant Verma, Shanmuganathan Raman.

We introduce Deep Mesh Denoising Network (DMD-Net), an end-to-end deep learning framework for mesh denoising. It uses a Graph Convolutional Neural Network that aggregates information over both the primal and dual graphs of the mesh, implemented as an asymmetric two-stream network with a primal-dual fusion block. The Feature Guided Transformer (FGT) paradigm, comprising a feature extractor, a transformer, and a denoiser, guides the transformation of a noisy input mesh into useful intermediate representations and, ultimately, a denoised mesh. Trained on a large dataset of 3D objects, our method performs competitively with or better than state-of-the-art algorithms and remains robust even at extremely high noise levels.
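
To make the primal and dual graphs concrete, here is a small sketch. It assumes the common convention that the primal graph links mesh vertices sharing an edge and the dual graph links faces sharing an edge; the function name `primal_and_dual_edges` is hypothetical, and DMD-Net's actual graph construction and node features may differ.

```python
from collections import defaultdict

def primal_and_dual_edges(faces):
    """Return (primal_edges, dual_edges) for a triangle mesh.

    faces: list of (i, j, k) vertex-index triples.
    primal_edges: sorted vertex pairs joined by a mesh edge.
    dual_edges: sorted face-index pairs whose triangles share a mesh edge.
    """
    edge_to_faces = defaultdict(list)
    for f_idx, (i, j, k) in enumerate(faces):
        for u, v in ((i, j), (j, k), (k, i)):
            edge_to_faces[(min(u, v), max(u, v))].append(f_idx)

    dual = set()
    for incident in edge_to_faces.values():
        # On a manifold mesh an interior edge has exactly two incident faces;
        # boundary edges have one and contribute no dual edge.
        for a in range(len(incident)):
            for b in range(a + 1, len(incident)):
                f, g = incident[a], incident[b]
                dual.add((min(f, g), max(f, g)))
    return sorted(edge_to_faces), sorted(dual)
```

For a tetrahedron every pair of faces shares an edge, so both graphs have six edges.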

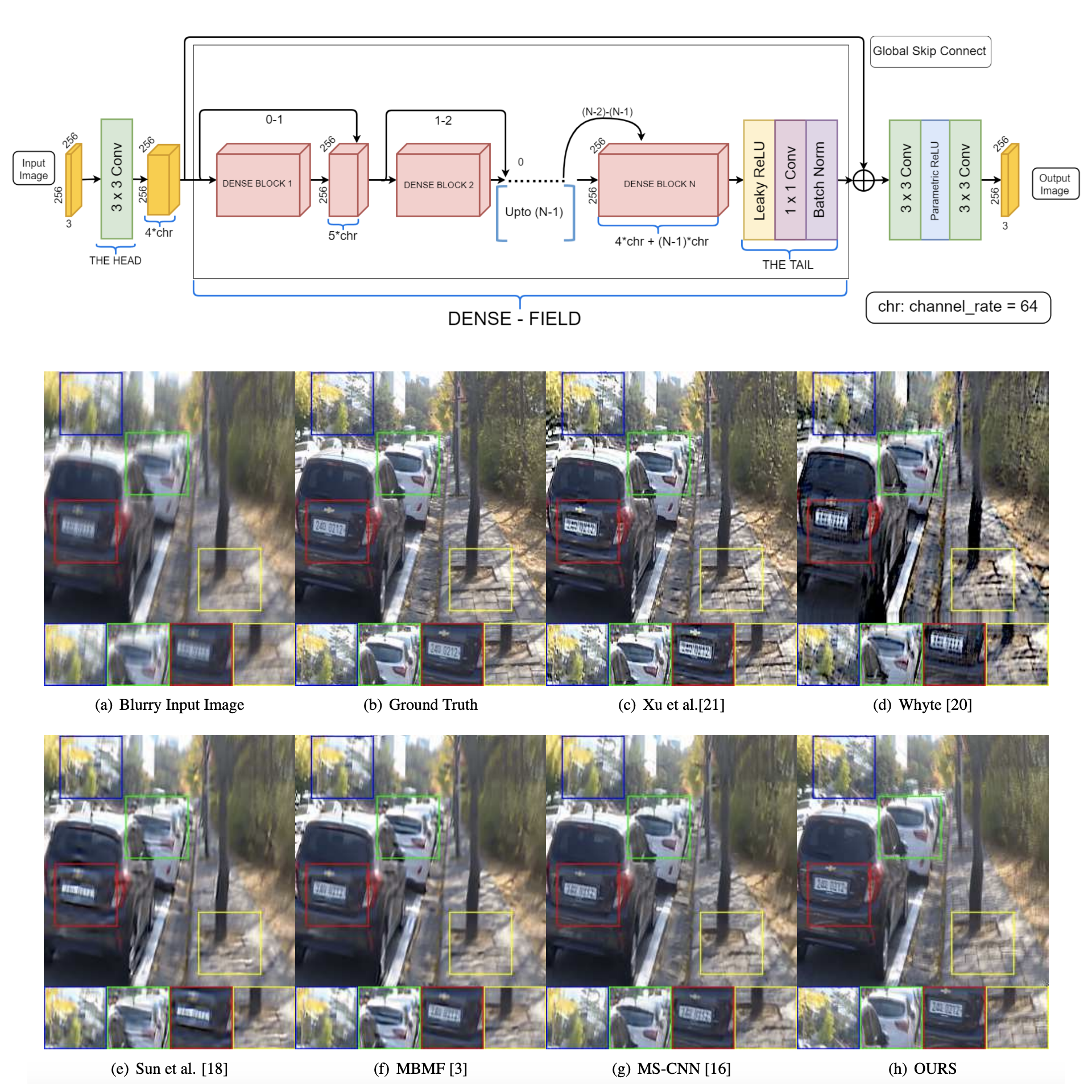

Sainandan Ramakrishnan, Shubham Pachori, Aalok Gangopadhyay, Shanmuganathan Raman.

Removing blur caused by camera shake is challenging because the problem is ill-posed and motion blur induces a spatially varying effect. This paper proposes a novel deep filter based on a Generative Adversarial Network (GAN) architecture with a global skip connection and a dense architecture. It removes blur without blur kernel estimation, which reduces test time for practical use. Experiments on benchmark datasets show that the proposed method outperforms state-of-the-art blind deblurring algorithms both quantitatively and qualitatively.

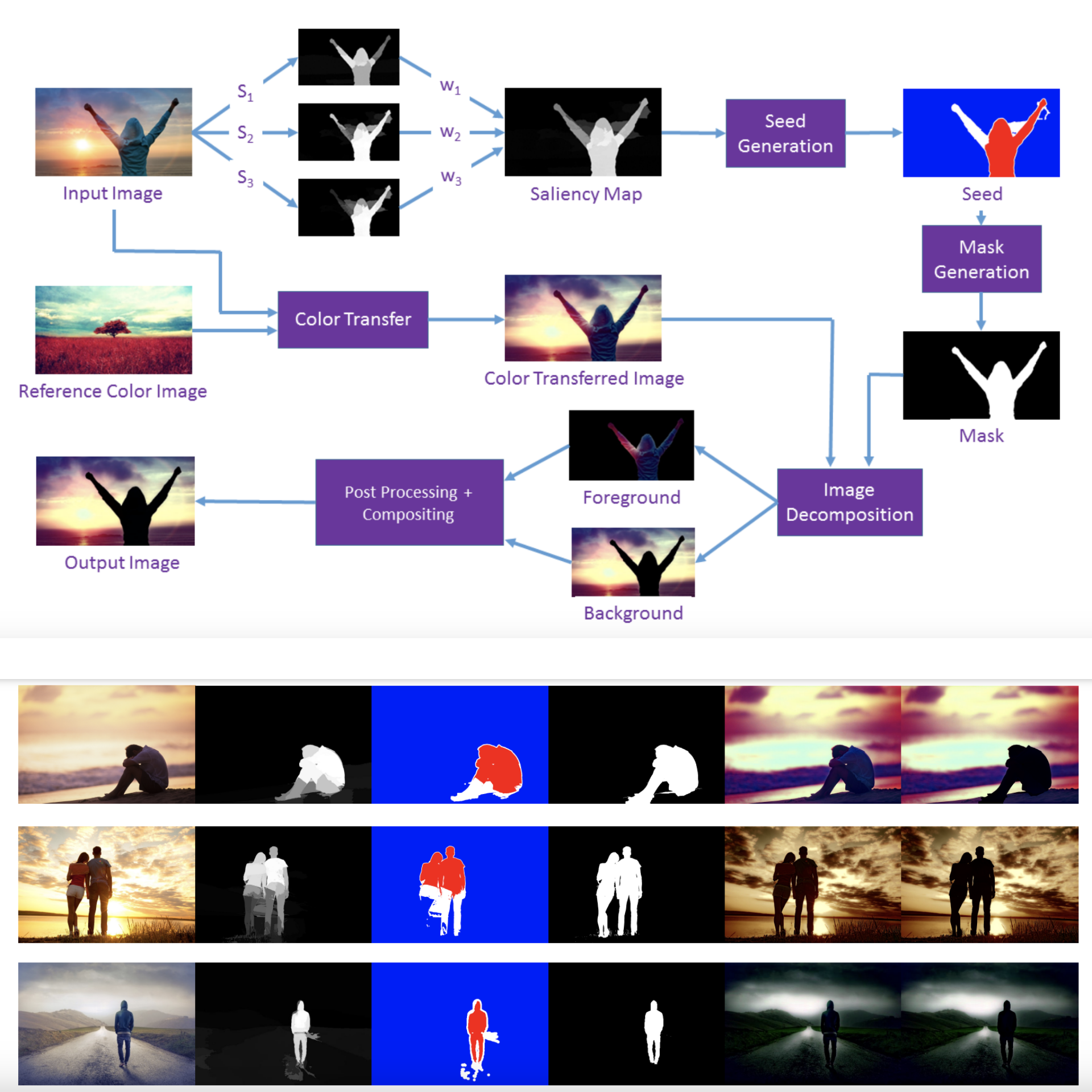

Aalok Gangopadhyay, Shubham Pachori, Shanmuganathan Raman.

This paper addresses the problem of automatically generating silhouette images from natural scene photographs. The key idea is to darken the foreground and brighten the background to produce the silhouette effect. The proposed computational pipeline produces high-quality silhouette images from any image that contains a foreground object against a background.
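
A minimal sketch of the darken-foreground, brighten-background step, assuming a binary foreground mask is already available. Obtaining that mask and the pipeline's actual tone adjustments are not reproduced here; `make_silhouette`, `fg_scale`, and `bg_gain` are hypothetical names and values chosen for illustration.

```python
import numpy as np

def make_silhouette(image, fg_mask, fg_scale=0.05, bg_gain=1.4):
    """Darken the foreground and brighten the background of an RGB image.

    image: float array in [0, 1] with shape (H, W, 3).
    fg_mask: boolean array of shape (H, W), True on the foreground object.
    fg_scale, bg_gain: hypothetical illustrative parameters, not from the paper.
    """
    out = image.astype(np.float64).copy()
    # Push foreground pixels toward black.
    out[fg_mask] *= fg_scale
    # Brighten background pixels, clipping to the valid intensity range.
    out[~fg_mask] = np.clip(out[~fg_mask] * bg_gain, 0.0, 1.0)
    return out
```

A simple multiplicative gain keeps the background's structure while separating it in brightness from the near-black foreground.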

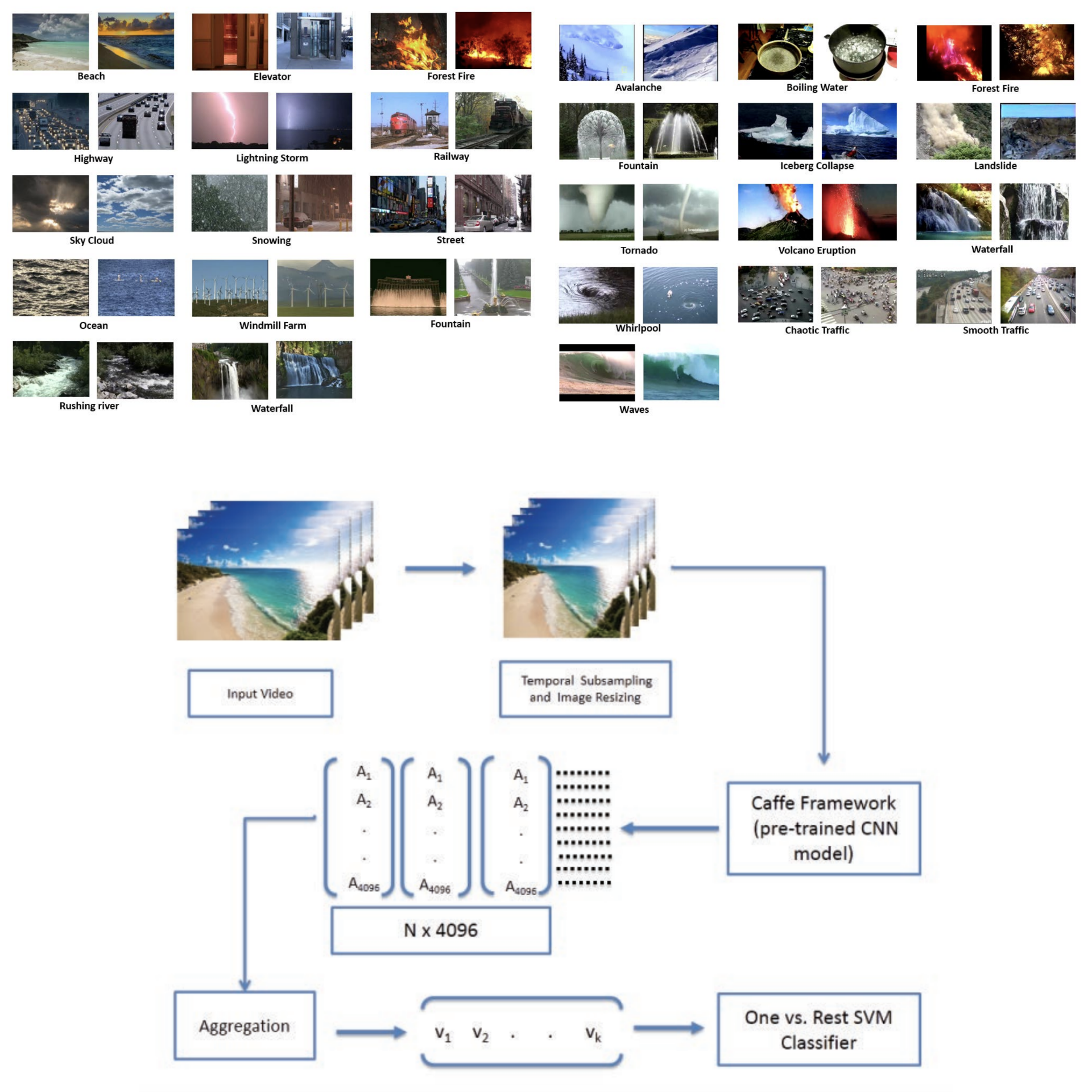

Aalok Gangopadhyay, Shivam Mani Tripathi, Ishan Jindal, Shanmuganathan Raman.
[paper]

Classifying videos of natural dynamic scenes is challenging, especially when they are captured with moving cameras. This paper analyzes statistical aggregation techniques applied to pre-trained convolutional neural network (CNN) models, building robust feature descriptors from CNN activation features across video frames. The results show that this approach outperforms state-of-the-art methods on the Maryland and YUPenn datasets and effectively distinguishes dynamic scenes even with dominant camera motion and complex dynamics. The paper also includes an extensive comparison of various aggregation methods.
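
As a rough illustration of statistical aggregation, the sketch pools a (frames x dimensions) matrix of per-frame CNN activations into a single fixed-length video descriptor. It assumes the activations were already extracted with some pre-trained CNN; the choice of statistics (mean, max, standard deviation), the final L2 normalization, and the name `aggregate_video_features` are illustrative assumptions, not the specific methods compared in the paper.

```python
import numpy as np

def aggregate_video_features(frame_features, methods=("mean", "max", "std")):
    """Pool per-frame CNN activations of shape (frames, dims) into one descriptor.

    The output length is len(methods) * dims, independent of the number of frames.
    """
    pools = {
        "mean": lambda x: x.mean(axis=0),
        "max": lambda x: x.max(axis=0),
        "std": lambda x: x.std(axis=0),
    }
    descriptor = np.concatenate([pools[m](frame_features) for m in methods])
    # L2-normalize, a common step before feeding descriptors to a linear classifier.
    norm = np.linalg.norm(descriptor)
    return descriptor / norm if norm > 0 else descriptor
```

Because each statistic collapses the frame axis, videos of different lengths map to descriptors of the same size.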

Thanks to Jon Barron for this awesome template and to Pratul Srinivasan for additional formatting.